2023-2024

Building an AI-powered distress detection system that cut counselor review time by 60%

Role

Outcome

Reduced counselor review time by ~60%

All pilot counselors extended usage and referred peers

Contributed to expansion into 12+ school districts by the next term

Context: Counselors decide whether Palo succeeds

Palo is an AI-powered decision-support platform used by school counselors across 12+ US districts. Counselors review behavioral signals, risk indicators, and contextual student data to make timely, high-stakes decisions.

The existing workflow was fragmented and cognitively heavy. Information was scattered across screens, state changes were unclear, and decision paths were not structured for speed or confidence. The challenge was to simplify complex signals into a clear, structured, and trustworthy operational flow without oversimplifying risk.

| The goal wasn’t to show more data – it was to help counselors act faster.

Designing with real stakeholders: Pilots with school counselors

The challenge: When more data slowed decisions

Palo’s interactive curriculum captured nuanced student behavior through:

Mood check-ins

Scenario-based choices

Written reflections

This created a rich picture of student well-being – but also surfaced hundreds of fragmented signals across classrooms and weeks.

The core challenge

| How might we surface meaningful risk signals from rich behavioral data – without adding cognitive load or slowing counselors down?

First attempt: A dashboard counselors didn’t return to

Our first response was a comprehensive dashboard that visualized emotional trends, engagement levels, and behavioral patterns across time. It looked informative – but it didn’t work.

After initial logins, counselor usage dropped sharply. The feedback was consistent:

Too many charts

Too much interpretation

Too much time required

What failed

| We optimized for completeness instead of counselor intent.

Counselors didn’t want another system to analyze. They wanted clarity that fit into an already overloaded day.

Design principle: Time is the real constraint

In high-stakes environments, time – not data – is the scarcest resource.

Counselors didn’t lack information. They lacked the time and cognitive space to interpret it. This led to a clear design principle that guided every decision that followed:

| If an insight can’t be understood and acted on in seconds, it doesn’t belong in the system.

This shifted our focus from visualizing data to reducing decision effort.

Fail fast: Testing insight delivery inside counselor workflows

Before redesigning the dashboard, we needed to validate one question:

| Could we surface meaningful signals without asking counselors to change how they worked?

To shorten feedback loops, we moved insight delivery directly into counselors’ existing workflow – their inbox.

I partnered with our researcher and SEL expert to send bi-weekly insight reports via email, iterating weekly based on counselor feedback. This allowed us to test:

Signal prioritization

Clarity of language

Trust in surfaced insights

What worked

| A simple, prioritized list of flagged students – with just enough context.

What didn’t work

Charts that required interpretation

School-level summaries that delayed action

Designing the system: Flags before frameworks

Once we validated that counselors responded best to prioritized signals, the challenge became designing a system that could reliably surface those signals at scale.

The goal wasn’t to explain student behavior. It was to alert counselors when attention was needed – quickly, safely, and with enough context to act.

We designed an AI-assisted pipeline that transformed raw behavioral data into distress flags, rather than scores or predictions.

How the system worked

Student inputs from Palo’s interactive curriculum – mood check-ins, scenario choices, and written reflections – were:

Tokenized and anonymized

Processed using researcher-defined prompts

Analyzed for unusual emotional or behavioral patterns

When a pattern crossed a defined threshold, the system generated a distress flag, adding the student to a counselor watchlist for review.
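The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the production pipeline.

- "Tokenized and anonymized" is read here as replacing student IDs with salted pseudonymous tokens.
- The researcher-defined prompt step is replaced by a hypothetical keyword stub.
- The 1–5 mood scale and the `mood_drop_threshold` value are illustrative.

In keeping with the constraints below, a flag carries plain-language reasons and the original responses, never a numeric score.

```python
from dataclasses import dataclass
from hashlib import sha256
from statistics import mean


@dataclass
class Response:
    student_id: str
    week: int
    mood: int          # hypothetical 1-5 mood check-in value
    reflection: str    # free-text written reflection


@dataclass
class Flag:
    token: str           # pseudonymous token instead of the student's identity
    reasons: list[str]   # plain-language reasons; deliberately no numeric score
    excerpts: list[str]  # original reflections, so context is never lost


def tokenize(student_id: str, salt: str) -> str:
    """Replace a student ID with a salted hash before analysis (assumed scheme)."""
    return sha256((salt + student_id).encode("utf-8")).hexdigest()[:12]


# Hypothetical stand-in for the researcher-defined prompt step;
# a real system would send the text to a model with that prompt instead.
DISTRESS_TERMS = ("alone", "hopeless", "can't sleep")


def reflection_signals(text: str) -> list[str]:
    lowered = text.lower()
    return [term for term in DISTRESS_TERMS if term in lowered]


def detect_flags(responses: list[Response], salt: str,
                 mood_drop_threshold: float = 1.5) -> list[Flag]:
    """Flag students whose latest responses cross either illustrative threshold."""
    by_student: dict[str, list[Response]] = {}
    for r in responses:
        by_student.setdefault(r.student_id, []).append(r)

    flags: list[Flag] = []
    for student_id, history in by_student.items():
        history.sort(key=lambda r: r.week)
        latest, earlier = history[-1], history[:-1]
        reasons: list[str] = []
        # Pattern 1: mood fell well below the student's own earlier average.
        if earlier and mean(r.mood for r in earlier) - latest.mood >= mood_drop_threshold:
            reasons.append("mood dropped below personal baseline")
        # Pattern 2: the latest reflection contains distress language.
        terms = reflection_signals(latest.reflection)
        if terms:
            reasons.append("reflection mentions: " + ", ".join(terms))
        if reasons:
            flags.append(Flag(token=tokenize(student_id, salt),
                              reasons=reasons,
                              excerpts=[r.reflection for r in history]))
    return flags
```

Comparing each student against their own earlier check-ins, rather than a class-wide norm, is one way to make "unusual" concrete. Mapping a token back to a name for the watchlist would happen in the counselor-facing app, which this sketch does not model.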

AI design constraints

From the outset, we set clear boundaries on what the system should not do:

No scores – to avoid false precision

No automated conclusions – counselors retained judgment

No loss of context – surfaced signals always linked back to student responses

These constraints helped build trust and ensured the system supported – rather than replaced – counselor decision-making.

Progressive disclosure: Start with names, earn depth

With the system in place, the UX challenge was deciding how much information to show – and when. Counselors needed to:

See risk quickly

Understand context when necessary

Avoid cognitive overload by default

We designed the interface around progressive disclosure, revealing information only as counselor intent deepened.

| The system should follow counselor intent, not data hierarchy.

How information unfolded

The key idea was clarity by default, with depth on demand.

Level 1 – Watchlist

A simple, prioritized list of flagged students. No charts. No scores. Just names that needed attention.

Level 2 – Student context

On selection, counselors saw recent emotional signals and excerpts from student responses.

Level 3 – Patterns over time

Deeper trends and correlations were available only when counselors chose to explore further.
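The three levels can be thought of as a view model that only exposes deeper fields as counselor intent deepens. Below is a minimal sketch under assumptions. The field names (`recent_signals`, `excerpts`, `weekly_moods`) and the three-excerpt cap are hypothetical, chosen for illustration only.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    WATCHLIST = 1  # Level 1: names only
    CONTEXT = 2    # Level 2: recent signals and response excerpts
    PATTERNS = 3   # Level 3: longer-term trends, only when explicitly requested


@dataclass
class StudentRecord:
    name: str
    recent_signals: list[str] = field(default_factory=list)
    excerpts: list[str] = field(default_factory=list)
    weekly_moods: list[int] = field(default_factory=list)


def view(record: StudentRecord, level: Level, max_excerpts: int = 3) -> dict:
    """Return only the fields the counselor has asked for so far."""
    payload: dict = {"name": record.name}
    if level >= Level.CONTEXT:
        payload["recent_signals"] = list(record.recent_signals)
        payload["excerpts"] = record.excerpts[:max_excerpts]
    if level >= Level.PATTERNS:
        payload["weekly_moods"] = list(record.weekly_moods)
    return payload
```

Keying disclosure to an ordered level keeps the default payload small. On slow school networks, this would also mean heavier trend data is only requested when a counselor opens it.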

Design-engineering tradeoffs under real constraints

As the system moved closer to production, design decisions were shaped by real-world constraints inside schools, not ideal conditions.

Most counselors accessed Palo on low-powered Chromebooks, often over unstable, low-bandwidth networks. This directly limited what the interface could reliably support.

Rather than forcing visual complexity, we made deliberate tradeoffs to protect performance, clarity, and trust.

Tradeoff

Key tradeoff we made:

Removed complex histograms

Multi-parameter charts added processing overhead and slowed page loads on school devices.Dropped weekly mood aggregation views

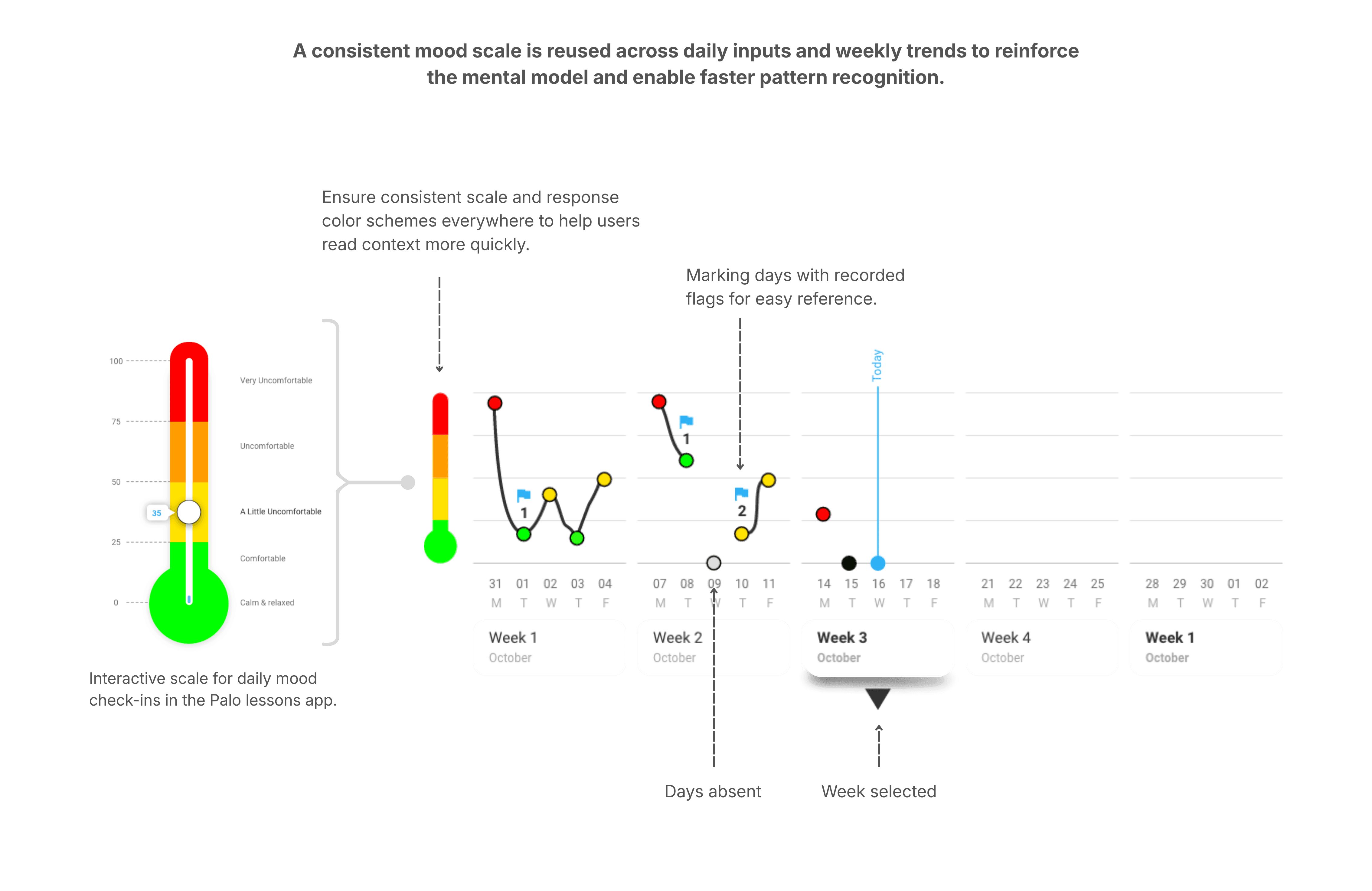

Aggregated trends were useful in theory, but delayed access to urgent, student-level signals.Replaced precision charts with a feeling scale

A simpler scale aligned better with how counselors discussed emotional states with students.

| We optimized for reliability and clarity over visual density – because broken insight is worse than missing insight.

The final experience: Clear signals, confident action

The final dashboard experience centered on speed, clarity, and trust.

Counselors landed on a prioritized watchlist showing only students who needed attention. From there, they could quickly review context, see recent emotional signals, and decide when and how to intervene – without navigating complex analytics.

The system supported counselor judgment instead of replacing it.

What counselors could do quickly

Identify at-risk students in seconds

Review contextual excerpts from student responses

Understand emotional patterns without interpretation overhead

Add notes and track follow-ups over time

Every interaction was designed to reduce thinking time and increase confidence in action.

Interaction and visual design decisions

The interaction design focused on reducing cognitive load, reinforcing mental models, and helping counselors move from signal to action with confidence.

Impact

The redesigned system significantly reduced the effort required for counselors to identify and act on student well-being concerns.

~60% reduction in counselor review time

100% of pilot counselors extended usage beyond the pilot period

Peer referrals from counselors to other schools

Contributed to expansion into 12+ school districts by the following term

More importantly, counselors reported spending less time searching for concerns and more time supporting students who needed attention.

Insights

What this taught me

In AI-driven products, legibility matters more than sophistication. If users can’t immediately understand why something surfaced, they won’t trust it.

Designing for action is fundamentally different from designing for insight – it requires ruthless prioritization and restraint.

In high-stakes contexts, users don’t want automation. They want support they can trust.

These principles now guide how I design systems where accuracy, ethics, and usability intersect.
