2025-2026

Designing a visual AI agent builder for non-technical knowledge workers

Internal alpha and pilot with enterprise users and early creators.

Role

Founding Product Designer, working closely with the CEO, CTO, a design advisor, engineers, and the marketing team (5–6 members) to design and evolve a no-code visual AI agent builder over an 11-month period (ongoing).

I owned the product end-to-end, from product framing and UX to system design and prototyping, while collaborating with early creators to validate workflows through the internal alpha and enterprise pilots.

Context

Agent builders promise leverage, but most are designed for engineers – not the people expected to use them.

Nagent set out to enable non-technical knowledge workers (marketers, advertisers, managers) to create and deploy AI agents without writing code.

The core challenge wasn’t access to models or tools. It was cognitive load.

Existing tools:

required heavy setup before delivering any value

exposed too many choices upfront

assumed technical understanding

This created friction at the exact moment curiosity should turn into momentum.

Core design principle: Creation before configuration

Before designing interfaces, I aligned the team on a first-principles question:

How does a non-technical user think while creating something they don’t yet understand?

That led to two early decisions:

Reject configuration-first, form-heavy flows

Avoid chat-only creation as the primary mental model

Instead, we anchored on a visual builder – not as a UI choice, but as a cognitive scaffold for exploration.

Phase 1 – When simplicity became a constraint

Goal: Make agent creation feel unintimidating.

The first approach explored a Lego-like system:

Grid-based canvas

Simple rectangular blocks with icon-only representation

Limited node types (input, model, tool, output)

Clear arrows and linear flows

What worked

Very easy to start

Low intimidation for first-time users

Clear for simple agents

What broke

As workflows grew:

Fixed grids felt constraining

Nodes hid intent behind modals

Users forgot what they had built

Cognitive load shifted from building to remembering

User testing made the issue clear:

| The system optimized for simplicity, not expressiveness.

Phase 2 – Reframing the mental model

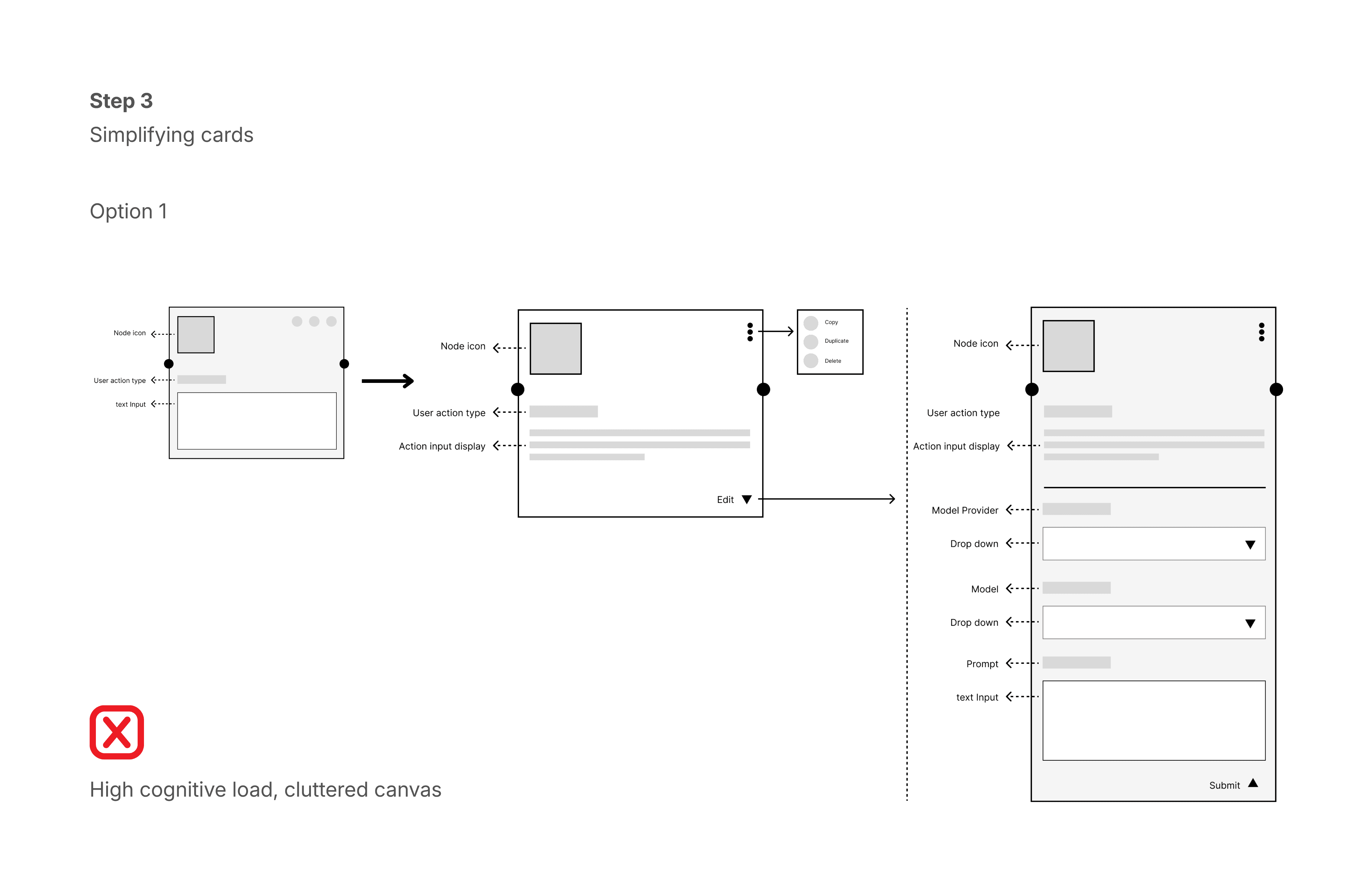

Rather than polishing Phase 1, we changed the foundation. The shift was from blocks that represent components to cards that communicate intent.

Key system decisions

Cards over rigid blocks

Cards surface the essence of each step – prompts, inputs, logic – enabling users to understand workflows at a glance.

| Process and wireframes

| Final design

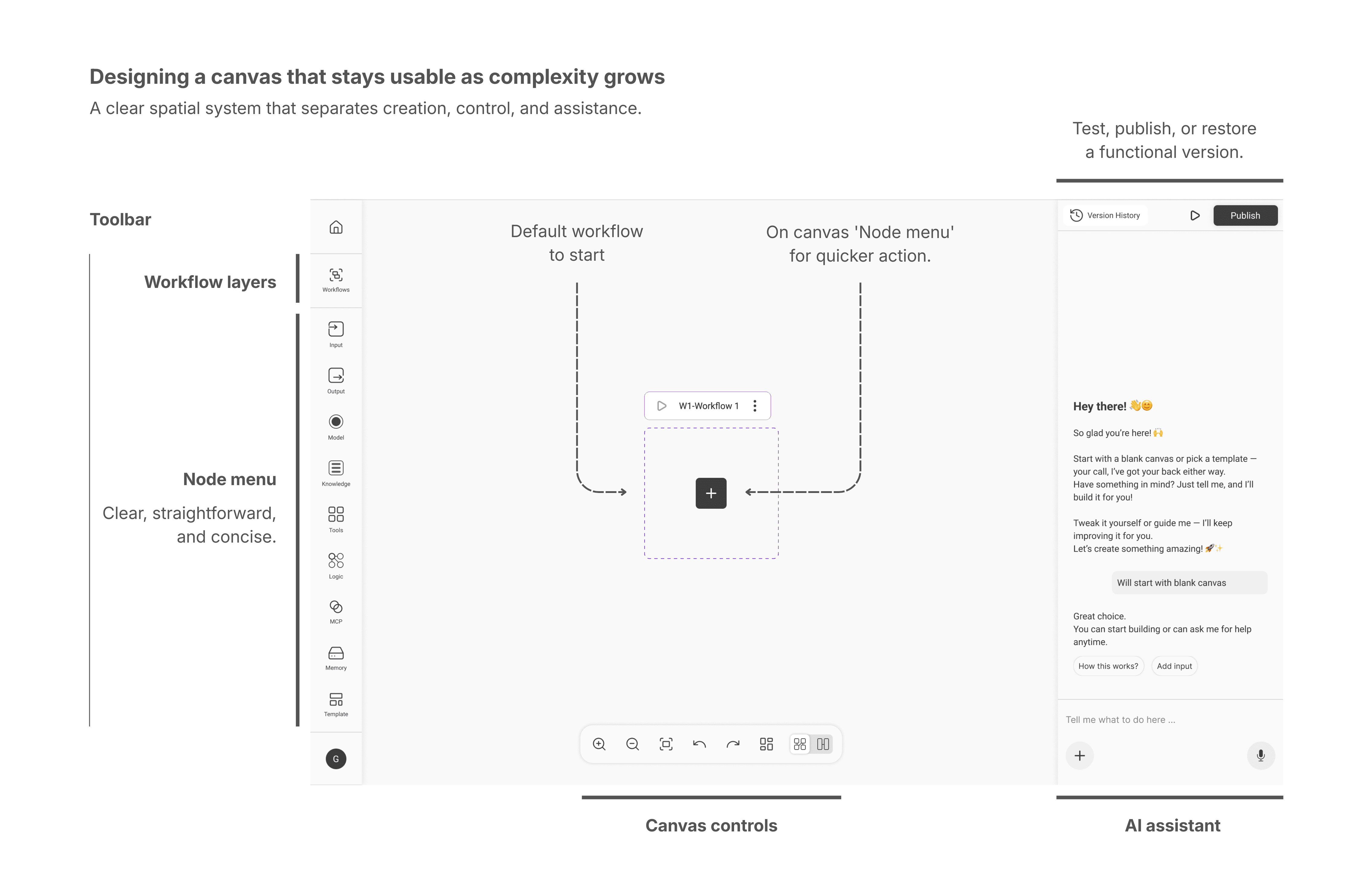

Free-form canvas

No fixed grid. Users can drag, connect, and reorganize freely, reflecting how real thinking happens: spatially and non-linearly.

Multiple entry paths

Blank canvas for exploration

Templates for familiarity

A co-pilot for guided creation and recovery when stuck

Different confidence levels demanded different starting points.

Orphan cards

Users can remove steps from a flow without deleting them, allowing experimentation without fear. This required architectural changes but reflected a core belief:

| Creation is exploratory, not transactional.

Exploration without penalties: Detached cards are clearly flagged, yet remain harmless – allowing users to experiment without disrupting the workflow.

Highlight only when it matters: Detached cards stay visually neutral unless they impact an active workflow.
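To make the orphan-card behavior concrete, here is a minimal sketch of how detached cards could be identified and flagged, assuming a canvas modeled as a directed graph with a single entry card. Everything in it (the `Canvas` class, `detach`, `needs_attention`, and the `references` mapping that stands in for one card reading another card's output) is hypothetical and is not Nagent's actual architecture. A card counts as active if it is reachable from the entry card; anything else on the canvas is an orphan that a run would skip, and an orphan is highlighted only when an active card still depends on it.

```python
from dataclasses import dataclass, field


@dataclass
class Canvas:
    """Hypothetical canvas model: cards are ids, edges are directed connections."""

    entry: str
    cards: set[str] = field(default_factory=set)
    edges: set[tuple[str, str]] = field(default_factory=set)
    # Hypothetical: card id -> ids of cards whose output it reads (e.g. a prompt variable).
    references: dict[str, set[str]] = field(default_factory=dict)

    def connect(self, src: str, dst: str) -> None:
        self.cards |= {src, dst}
        self.edges.add((src, dst))

    def detach(self, card: str) -> None:
        # Drop the card's connections but keep the card itself on the canvas.
        self.edges = {(a, b) for (a, b) in self.edges if card not in (a, b)}

    def active(self) -> set[str]:
        # Depth-first walk from the entry card; everything reached is part of the live flow.
        seen: set[str] = set()
        stack = [self.entry]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(b for (a, b) in self.edges if a == node)
        return seen

    def orphans(self) -> set[str]:
        # Detached cards: still on the canvas, but skipped when the workflow runs.
        return self.cards - self.active()

    def needs_attention(self) -> set[str]:
        # Stay neutral by default; highlight an orphan only if an active card reads from it.
        live = self.active()
        return {
            o for o in self.orphans()
            if any(o in self.references.get(c, set()) for c in live)
        }


canvas = Canvas(entry="input")
canvas.connect("input", "research")
canvas.connect("research", "draft")
canvas.connect("draft", "output")
canvas.connect("input", "brand_voice")
canvas.connect("input", "translate")
canvas.references["draft"] = {"brand_voice"}

canvas.detach("translate")
print(canvas.orphans())          # {'translate'}
print(canvas.needs_attention())  # set()

canvas.detach("brand_voice")
print(canvas.needs_attention())  # {'brand_voice'}
```

In this sketch, detaching an unused side branch leaves it on the canvas as a neutral orphan, while detaching a card that the draft step still reads from is the one case that gets flagged.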

Inline error handling

Errors surface where they occur, with AI-driven suggestions and one-click fixes – keeping users in flow rather than breaking momentum.

Interaction and visual design decisions

The visual and interaction design focused on making complex AI systems understandable, controllable, and safe for non-technical users through clear mental models and progressive disclosure.

Designing against existing constraints

Nagent already had a form-based builder that required upfront definitions, such as the agent's name and purpose.

I pushed back on this model.

For early users, upfront commitment:

increased anxiety

reduced experimentation

raised the entry barrier

Working with engineering, we shifted toward an open-ended creation model, where structure emerges through use rather than being demanded upfront.

This was a significant system change – but essential for creator adoption.

Current limitations

Nagent is still evolving, and several challenges remain open:

Context-aware model suggestions

The system currently lists models rather than inferring the right one based on node intent.

Multi-agent orchestration

Multiple workflows exist, but higher-order agent collaboration needs clearer abstractions.

RAG, memory, and AI conditionals

These are core to powerful agents, yet still require better mental models for non-technical users.

These are not implementation gaps – they are unsolved design problems in a fast-moving domain.

What this case study demonstrates

Nagent isn’t a finished product. That’s the point. It demonstrates:

First-principles thinking in an emerging AI domain

Willingness to abandon early designs when mental models break

Design leadership across UX, system architecture, and product framing

Comfort designing systems before outcomes exist

What this taught me

Mental models are key

In AI tools, mental models matter more than features

Reducing cognitive load often means removing commitment, not adding guidance

Systems that respect exploration scale better than rigid workflows

Designing for non-technical users requires restraint, not oversimplification